Introduction

In a previous post, we talked about statistics in general and descriptive statistics in detail. It is best to read that post before this one. But for a recap, here is what we said.

- Statistics is the practice of collecting and analyzing numerical data.

- There are two types of statistics - descriptive statistics and inferential statistics.

- Descriptive statistics help us describe and summarize the data at hand. They include measures of central tendency such as the mean, median, and mode, and measures of variability such as the range, interquartile range, variance, and standard deviation.

- Inferential statistics is used to interpret the meaning of descriptive statistics: it lets us draw conclusions about a whole population from a sample. So descriptive statistics summarizes our data, while inferential statistics helps us derive meaning from the summarized data.

In this post, we will take a deep dive into inferential statistics. But before we get there, we need to revise some school-level math.

Probability

Anyone passing through 8th grade already knows what probability is, but for the sake of completeness, let’s quote the definition from Google.

Probability is the extent to which an event is likely to occur, measured by the ratio of the favourable cases to the whole number of cases possible.

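In simple terms, probability is the measure of how likely an event is to occur. When all outcomes are equally likely, the equation for calculating probability is simple.

P(event) = (number of favourable outcomes) / (total number of possible outcomes)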

Points to be noted:

- An experiment whose outcome is uncertain is called a random experiment.

- That means a random experiment will have multiple possible outcomes. The set of all these outcomes is called the sample space.

- Any single outcome, or any collection of outcomes, is called an event.

- If two or more events do not have common outcomes, they’re called disjoint events.

- Otherwise, they’re called non-disjoint events.

- A good example of disjoint events would be the faces of a single die. One roll gives exactly one outcome, so “rolling a 1” and “rolling a 6” can never happen at the same time.

- A good example of non-disjoint events would be rolling 2 dice. We can get a 6 on one die and simultaneously get a 6 on the other, so “the first die shows 6” and “the second die shows 6” share the outcome (6, 6). The same holds for any pair of values on the two dice.

- In simple terms, when one of two disjoint events occurs, the other cannot occur in the same trial. Meanwhile, when one of two non-disjoint events occurs, the other can still occur.
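The points above can be sketched in a few lines of Python. This is only an illustration, assuming fair dice with equally likely outcomes; the names `probability`, `first_is_six`, and `second_is_six` are made up for this example.

```python
from fractions import Fraction
from itertools import product

# Sample space of one fair six-sided die (assumption: all faces equally likely).
one_die = set(range(1, 7))


def probability(event, sample_space):
    """P(event) = favourable outcomes / all possible outcomes."""
    return Fraction(len(event & sample_space), len(sample_space))


# Two disjoint events on a single die: they share no outcome.
rolled_one = {1}
rolled_six = {6}
are_disjoint = rolled_one.isdisjoint(rolled_six)

# Sample space of two dice: every ordered pair (first, second).
two_dice = set(product(one_die, repeat=2))

# Two non-disjoint events on two dice: the outcome (6, 6) belongs to both.
first_is_six = {pair for pair in two_dice if pair[0] == 6}
second_is_six = {pair for pair in two_dice if pair[1] == 6}
shared = first_is_six & second_is_six

print(probability({2, 4, 6}, one_die))  # probability of an even roll
print(are_disjoint, shared)
```

Using `Fraction` keeps the probabilities exact, which makes it easier to compare them with hand calculations.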

Probability distribution

When we’re dealing with a single isolated event with fixed outcomes, it is easy to calculate probability. But when there is a whole set of events, it becomes tiresome to calculate the probabilities individually. The best way to overcome this is to define a probability distribution function. We can pass outcomes as values to the function and it will give us the probability of the outcome.

Random variable

This is often a confusing term for people learning probability for the first time. But really, it’s simple. As we already mentioned above, an experiment with multiple outcomes is called a random experiment. The set of all the outcomes of a random experiment is called the sample space. A random variable assigns a number to each outcome in the sample space, so that we can ask for the probability of it taking a particular value. In other terms, a random variable maps the outcomes in the sample space to numbers. It is usually denoted by X.

Discrete random variable

If the sample space has discrete values, then the random variable is called a discrete random variable. For example, take rolling a die. It has the following set of outcomes.

S = {1, 2, 3, 4, 5, 6}

X can take each of these values. The probability that X takes the value x is written P(X=x) or just P(x). Here P is the probability function.

Hence,

Probability of 1 is P(X=1) or P(1)

Probability of 2 is P(X=2) or P(2)

Probability of 3 is P(X=3) or P(3)

Probability of 4 is P(X=4) or P(4)

Probability of 5 is P(X=5) or P(5)

Probability of 6 is P(X=6) or P(6)

Notice how X takes discrete values from 1 to 6 here. Hence, X is called a discrete random variable.
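As a small sketch, the probability function of the die can be written as a Python function. The fair die (each face with probability 1/6) is an assumption, and the name `pmf` is chosen only for this example.

```python
from fractions import Fraction

# Sample space of a single die roll.
sample_space = [1, 2, 3, 4, 5, 6]


def pmf(x):
    """P(X = x) for a fair die: 1/6 for each face, 0 for any other value."""
    return Fraction(1, 6) if x in sample_space else Fraction(0)


for x in sample_space:
    print(f"P(X={x}) = {pmf(x)}")

# The probabilities over the whole sample space add up to 1.
total = sum(pmf(x) for x in sample_space)
print("total =", total)
```

Passing an outcome to `pmf` returns its probability, which is exactly the role of the probability distribution function described earlier.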

Continuous random variable

In data science, we don’t have the luxury of just dealing with experiments having discrete outcomes. For example, suppose we are required to create an algorithm that will predict the amount of rainfall. Normally we hear predictions like “there will be 2 cm of rain tomorrow”. But let us be clear, there is no such thing as exactly 2 cm of rain. Even if a milligram of additional water comes down, our prediction is wrong. So saying that there will be exactly 2 cm of rain is predicting something with zero or near-zero probability. And by logic, who would predict an outcome that has zero or near-zero probability? Normally, the outcome with the highest probability is used as the prediction.

When they say there will be 2 cm of rain tomorrow, what they actually mean is that there will be about 2 cm of rain. The amount of rain could be 1.9999 or 2.0001. Hence there is a range in which the amount of rain is highly probable. The number of values in this range is infinite: any number between 1.9999 and 2.0001 falls within it.

Here X can take any value in the continuous range from 1.9999 to 2.0001, hence the term continuous random variable.

Probability density function

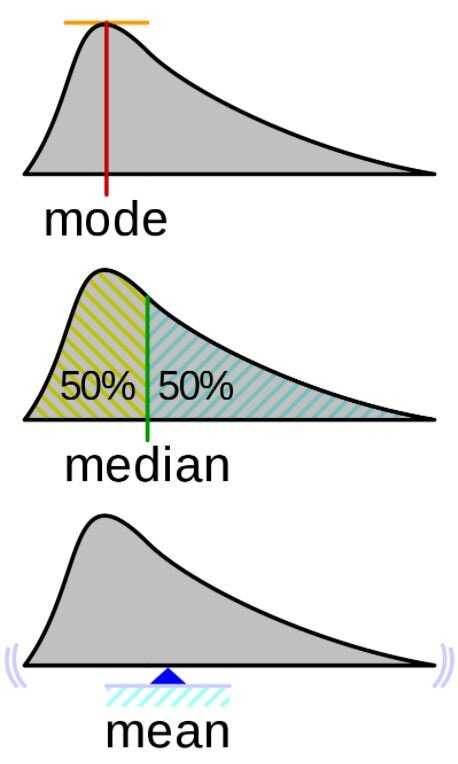

In data science, we’ll mostly be dealing with continuous random variables. The probability density function (PDF) describes how probability is spread over the values of a continuous random variable. To understand how it works, let’s first visualize how a PDF looks by looking at its graph.

Probability density is marked on the y-axis and outcomes are marked on the x-axis. When we’re calculating the probability of a continuous random variable, we’re dealing with a range of outcomes. The probability of a range is the area under the curve over that range, so we can use an integral to calculate it.

Notice how the mode (the most frequent value) sits at the peak of the curve, where the density is highest. Logically, it is correct. The median (the central value) divides the area under the PDF into two halves, with a probability of 0.5 on either side.

The entire area under the curve is 1, because the probabilities of all possible outcomes add up to 1.
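To make the "area under the curve" idea concrete, here is a minimal sketch using Python's standard `statistics.NormalDist`. The rainfall model (mean 2.0 cm, standard deviation 0.5 cm) is an invented assumption, and `area_under_pdf` is a helper written for this example.

```python
from statistics import NormalDist

# Assumed rainfall model for illustration: mean 2.0 cm, standard deviation 0.5 cm.
rain = NormalDist(mu=2.0, sigma=0.5)


def area_under_pdf(dist, a, b, steps=10_000):
    """Approximate the integral of dist.pdf from a to b with the trapezoid rule."""
    width = (b - a) / steps
    total = 0.5 * (dist.pdf(a) + dist.pdf(b))
    for i in range(1, steps):
        total += dist.pdf(a + i * width)
    return total * width


# Probability that the rainfall lies between 1.5 cm and 2.5 cm.
p_range = area_under_pdf(rain, 1.5, 2.5)

# The same probability from the cumulative distribution function.
p_exact = rain.cdf(2.5) - rain.cdf(1.5)

# Area over a very wide range (ten standard deviations each side) is close to 1.
p_total = area_under_pdf(rain, -3.0, 7.0)

# Half of the probability lies below the median.
p_below_median = rain.cdf(rain.median)

print(p_range, p_exact, p_total, p_below_median)
```

The numerical integral and the CDF difference should agree closely, which is a quick sanity check on the integration.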

Normal Distribution

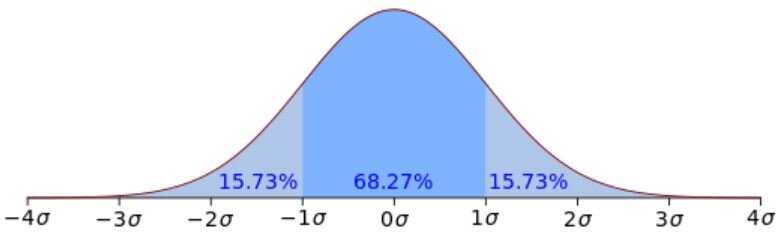

A distribution is termed “normal” when its values are spread symmetrically around the mean in a bell shape, so that values near the mean are more probable than values away from the mean. Sample data from many natural phenomena approximately follow a normal distribution.

In the distribution, the centre of the curve is determined by the mean, and the width is determined by the standard deviation: the smaller the standard deviation, the narrower and taller the curve. The image above represents the PDF curve of a normal distribution.

The idea behind the curve is that the data near the mean will occur more frequently than data away from the mean. The curve thus generated is called a bell curve. This is very important when the distribution of the random variable (as often happens in real-world scenarios) is not known. It will make more sense once we cover the central limit theorem.
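The effect of the mean and standard deviation on the bell curve can be checked with a short sketch. The three distributions below are arbitrary examples chosen for illustration.

```python
from math import pi, sqrt
from statistics import NormalDist

# Three example normal distributions (parameters chosen only for illustration).
narrow = NormalDist(mu=0.0, sigma=1.0)
wide = NormalDist(mu=0.0, sigma=2.0)
shifted = NormalDist(mu=3.0, sigma=1.0)

# The peak of the curve is at the mean; its height is 1 / (sigma * sqrt(2 * pi)).
peak_narrow = narrow.pdf(narrow.mean)
peak_wide = wide.pdf(wide.mean)
peak_shifted = shifted.pdf(shifted.mean)

# Probability of landing within one standard deviation of the mean.
within_one_sd = narrow.cdf(1.0) - narrow.cdf(-1.0)

print(peak_narrow, peak_wide, peak_shifted, within_one_sd)
```

Doubling the standard deviation halves the peak height, while changing the mean only slides the curve along the x-axis.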

Central limit theorem

The central limit theorem essentially states that if we draw many sufficiently large samples from a huge population, the means of those samples will follow an approximately normal distribution centred on the mean of the whole population, whatever the distribution of the population itself.

Here we don’t know the probability distribution of the whole population. But we observe that the distribution of the sample means follows a normal distribution. Hence sample means near the population mean are more probable than sample means far away from it. The bigger each sample, the tighter the sample means cluster around the population mean and the greater the accuracy of the calculation.

A real-life example would be the calculation of the average age of a country. We cannot go around asking each person for their age. So we take a sample of our country’s population and ask for their age. Then we take the mean of that sample. It is assumed that mean of that sample will be approximately the same as the mean of the whole population. We can take multiple samples to see if their means match.
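A small simulation can illustrate the theorem. This is a sketch under stated assumptions: the "population" is an exponential distribution with rate 1 (true mean 1, true standard deviation 1), chosen because it is clearly not bell-shaped, and the sample size and number of samples are arbitrary.

```python
import random
from statistics import mean, stdev

rng = random.Random(0)  # fixed seed so the run is repeatable

SAMPLE_SIZE = 50
NUM_SAMPLES = 2000


def draw_sample_mean():
    """Draw one sample from the skewed population and return its mean."""
    sample = [rng.expovariate(1.0) for _ in range(SAMPLE_SIZE)]
    return mean(sample)


sample_means = [draw_sample_mean() for _ in range(NUM_SAMPLES)]

center = mean(sample_means)   # expected to be close to the population mean, 1
spread = stdev(sample_means)  # expected to be close to 1 / sqrt(SAMPLE_SIZE)

# In a normal distribution, roughly 68% of values lie within one
# standard deviation of the centre.
share_within_one_sd = (
    sum(abs(m - center) <= spread for m in sample_means) / NUM_SAMPLES
)

print(center, spread, share_within_one_sd)
```

Even though individual values from this population are heavily skewed, the sample means cluster symmetrically around the population mean.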

Types of probability

1. Marginal probability

Marginal probability is the probability of an event occurring irrespective of the outcome of another variable.

2. Conditional probability

Conditional probability is the probability of an event occurring given that another event has already occurred.

3. Joint probability

Joint probability is the probability of two events both happening at the same time.

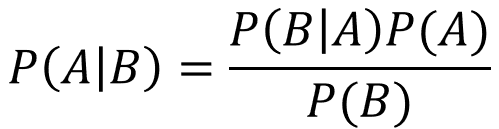
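The three types can be computed side by side on the two-dice example. This is only a sketch assuming fair dice; the events `first_is_six` and `total_at_least_ten` are invented for illustration.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(predicate):
    """Probability that an outcome satisfies the given predicate."""
    favourable = sum(1 for o in outcomes if predicate(o))
    return Fraction(favourable, len(outcomes))


def first_is_six(o):
    return o[0] == 6


def total_at_least_ten(o):
    return o[0] + o[1] >= 10


# Marginal: the first die shows 6, whatever the second die does.
marginal = prob(first_is_six)

# Joint: the first die shows 6 AND the total is at least 10.
joint = prob(lambda o: first_is_six(o) and total_at_least_ten(o))

# Conditional: the first die shows 6 GIVEN that the total is at least 10.
conditional = joint / prob(total_at_least_ten)

print(marginal, joint, conditional)
```

Knowing that the total is at least 10 raises the probability of a 6 on the first die from the marginal value to the conditional value.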

Bayes’ theorem

Bayes’ theorem is used to calculate conditional probability.

P(A | B) = P(B | A) × P(A) / P(B)

P(A | B) - Probability of A given B has already happened

P(B | A) - Probability of B given A has already happened

P(A) - Probability of A

P(B) - Probability of B

On the LHS, we have the conditional probability we want to estimate. On the RHS, we have quantities that are usually easier to obtain: the reverse conditional probability and the individual probabilities of the two events.

Bayes’ theorem becomes useful when we already know something about these preconditions. For example, consider an email spam filter such as Gmail’s. It has a set of preconditions, such as particular words, that tend to show up in spam. We can estimate the probability that a given mail is spam by calculating the conditional probability of spam given that those preconditions are met.

Another example would be age-related diseases. We can estimate the likelihood of an age-related disease using age as a precondition.
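Here is a minimal sketch of the spam example. All numbers are hypothetical and chosen only to make the arithmetic easy to follow; this is not how any real spam filter is implemented.

```python
def bayes(p_b_given_a, p_a, p_b):
    """Bayes' theorem: P(A | B) = P(B | A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b


# Hypothetical numbers, for illustration only.
p_spam = 0.2                # P(A): a mail is spam
p_word_given_spam = 0.6     # P(B | A): a spam mail contains the word "offer"
p_word_given_not_spam = 0.05  # a normal mail contains the word "offer"

# P(B): overall probability of seeing the word, from both kinds of mail.
p_word = p_word_given_spam * p_spam + p_word_given_not_spam * (1 - p_spam)

# P(A | B): probability that a mail is spam given that it contains the word.
p_spam_given_word = bayes(p_word_given_spam, p_spam, p_word)

print(round(p_spam_given_word, 4))
```

With these made-up inputs, seeing the word raises the probability of spam from 20% to 75%.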

Inferential statistics

It is finally time to discuss inferential statistics. As mentioned above, we covered descriptive statistics in a previous post. We also said that inferential statistics is about finding the meaning of descriptive statistics.

Point estimation

Point estimation is a method to find a single value from a sample that would serve as the best estimate for an unknown population parameter. We already discussed an example relating to point estimation when discussing the central limit theorem. Let’s quote from above.

A real-life example would be the calculation of the average age of a country. We cannot go around asking each person for their age. So we take a sample of our country’s population and ask for their age. Then we take the mean of that sample. It is assumed that mean of that sample will be approximately the same as the mean of the whole population. We can take multiple samples to see if their means match.

Here the average age is the parameter we are looking to find for the whole population. The parameter is unknown and impractical to measure directly. This method of using a sample to arrive at a single estimate for the whole population is called point estimation.

Here the function that is used to calculate the average age is called an estimator. The average age itself is called the estimate.

Ways to calculate the estimate

- Method of Moments: In this method we express the unknown parameter in terms of the moments of the population, such as its mean, and then replace those with the same moments calculated from the sample. The above case is a typical example. We know a country’s population has an average age. We calculate the average on a sample instead, and that sample average becomes the estimate.

- Maximum Likelihood: In this method, a statistical model for the data is assumed first. A likelihood function then gives the joint probability of the observed sample for each candidate value of the parameter. Maximizing here just means picking the parameter value under which the observed sample is most probable in the assumed model.

- Bayes’ Estimators: Bayes’ estimator has to do with Bayes’ theorem. We start with a prior belief about the parameter and use Bayes’ theorem to update it with the sample data. The estimate is then the value that minimizes the expected loss (the risk) under that updated belief.

- Best Unbiased Estimators: Several estimators can be unbiased for the same parameter, meaning that on average they hit the true value. They will still give different values on a given sample. The best one is the unbiased estimator with the smallest variance, and which estimator that is depends on the parameter we’re trying to calculate.
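As a rough sketch of the first two methods, consider estimating the probability of heads for a coin. The sample of flips is hypothetical, and the grid search is a deliberately simple stand-in for a proper optimizer.

```python
from math import log
from statistics import mean

# Hypothetical sample of 10 coin flips: 1 = heads, 0 = tails.
flips = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]


def log_likelihood(p, data):
    """Log of the joint probability of the sample if P(heads) = p."""
    heads = sum(data)
    tails = len(data) - heads
    return heads * log(p) + tails * log(1 - p)


# Maximum likelihood: try candidate values of p and keep the one that
# makes the observed sample most probable.
candidates = [i / 100 for i in range(1, 100)]
p_mle = max(candidates, key=lambda p: log_likelihood(p, flips))

# Method of moments: the population mean of a 0/1 variable is p itself,
# so the sample mean is used directly as the estimate.
p_moments = mean(flips)

print(p_mle, p_moments)
```

For this simple model both estimators agree. In general they can differ, which is why the choice of estimator matters.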

Interval estimation

Unlike point estimation, in interval estimation, a range of values is used to estimate a parameter of the population. The upper point of the range is called the upper confidence limit and the lower point of the range is called the lower confidence limit.

If we were asked how much time it takes to travel to school, in point estimation, we’ll answer with a single time like “12 minutes”. But if we’re using interval estimation, the answer will be a range, like between 10 and 15 minutes. Here 10 is the lower confidence limit and 15 is the upper confidence limit.

Confidence interval and confidence level

When we were dealing with point estimation, the estimate was a point. In interval estimation, the estimate will be an interval as exemplified above.

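A confidence interval is an interval estimate together with a confidence level. The confidence level is a measure of our confidence that the parameter of our population, such as the mean, is contained in the interval estimate. Together they give us an idea about the amount of uncertainty associated with a given estimate.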

We can set a confidence level before we arrive at our estimate. Typically this is either 95% or 99%.

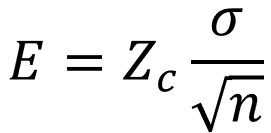

Margin of error

Margin of error is the largest distance we expect, at a chosen confidence level, between the point estimate and the value of the parameter it is estimating. Generally, we only know the value calculated from the sample, not the true value of the population parameter. That means we cannot directly calculate the distance between them. Instead, we use the confidence level. The equation for finding the margin of error is as follows.

E = Zc × σ / √n

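E - Margin of error

Zc - Critical value (Z-score) for the chosen confidence level

σ - Standard deviation of the population

n - Size of the sample

The critical value is taken from the Z-score table. It is about 1.96 for a 95% confidence level and about 2.576 for a 99% confidence level.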
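The margin of error and the resulting confidence interval can be sketched as follows. The sample figures (mean age 35, standard deviation 10, 100 people) are hypothetical, and the helper names are chosen for this example. Instead of a printed Z-score table, the sketch uses `NormalDist().inv_cdf` from the standard library.

```python
from math import sqrt
from statistics import NormalDist


def critical_value(confidence_level):
    """Two-sided Z critical value for a confidence level such as 0.95."""
    return NormalDist().inv_cdf(0.5 + confidence_level / 2)


def margin_of_error(confidence_level, sigma, n):
    """E = Zc * sigma / sqrt(n)."""
    return critical_value(confidence_level) * sigma / sqrt(n)


# Hypothetical survey: 100 people, mean age 35, standard deviation 10.
sample_mean = 35.0
error = margin_of_error(0.95, sigma=10.0, n=100)

# The interval estimate: lower and upper confidence limits.
lower_limit = sample_mean - error
upper_limit = sample_mean + error

print(round(error, 2), round(lower_limit, 2), round(upper_limit, 2))
```

Raising the confidence level widens the interval, and increasing the sample size narrows it.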

Hypothesis testing

When we’re using sample data, we start from a hypothesis about the population, for example that a parameter of the population equals some claimed value. Hypothesis testing is a way to check whether the sample data are consistent with that hypothesis. It is achieved by calculating a probability: how likely it would be to see a sample like ours if the hypothesis were true.

If the hypothesis is true, results that agree with it should show up as we keep repeating the experiment. When enough experiments have been conducted and the results we see would still be very unlikely under the hypothesis (typically a probability below 5%), we reject the hypothesis. Otherwise, we have no grounds to reject it. This is called hypothesis testing.
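One common way to carry out such a test is a one-sample Z-test, sketched below. The post does not name a specific test, so this choice is an assumption, and the figures (claimed mean 50, sample mean 52, standard deviation 10, sample size 100) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def z_test(sample_mean, claimed_mean, sigma, n):
    """Return the Z statistic and the two-sided p-value of a one-sample Z-test."""
    z = (sample_mean - claimed_mean) / (sigma / sqrt(n))
    # Probability of a result at least this far from the claimed mean,
    # in either direction, if the hypothesis were true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothesis: the population mean is 50.
# Hypothetical sample: 100 observations with mean 52, known sigma of 10.
z, p_value = z_test(sample_mean=52.0, claimed_mean=50.0, sigma=10.0, n=100)

# Reject the hypothesis when the p-value falls below the 5% threshold.
reject = p_value < 0.05

print(round(z, 2), round(p_value, 4), reject)
```

The 5% threshold corresponds to the 95% confidence level used for interval estimation.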